Implement a Least Recently Used Cache in Go.

Introduction

A cache is a temporary storage area that holds frequently accessed data to reduce the need for costly retrievals from slower sources, such as databases or external APIs. An LRU cache implements a specific eviction policy that removes the least recently used item when the cache reaches its capacity.

Purpose in Optimizing Data Access:

Reduced latency: By storing frequently accessed data in memory, LRU caches can significantly decrease the time it takes to retrieve that data, leading to faster application response times and a smoother user experience.

Reduced network traffic: By minimizing the need to fetch data from external sources repeatedly, LRU caches can conserve network bandwidth and reduce strain on backend systems.

Improved scalability: LRU caches can help applications handle more requests and scale more effectively.

Enhanced user experience: Faster data access and reduced latency often translate into a more responsive and enjoyable user experience, especially for applications that rely heavily on data retrieval.

Common use cases where LRU caches are beneficial

Web Servers:

Caching static assets: Images, CSS stylesheets, JavaScript files, and other static resources are cached to reduce server load and improve page load times.

Session data management: User session data, such as login information and shopping cart contents, can be cached for quick retrieval and improved user experience.

Database Systems:

Query caching: Results of frequently executed database queries can be cached to reduce database load and enhance query performance.

Row-level caching: Individual rows or data objects are cached to accelerate data retrieval and updates.

File Systems:

Caching file metadata: Information about files and directories can be cached to speed up file operations and reduce disk I/O.

Caching file content: Frequently accessed file content can be cached in memory for faster reads, especially for files or frequently accessed parts of larger files.

LRU Algorithm Core Principle

- Evicting Least Recently Used Items: When the cache reaches its capacity, the LRU algorithm removes the item that is least Retrieved recently to make room for new items. This prioritization uses the assumption that recently accessed items are more likely to be needed again shortly.

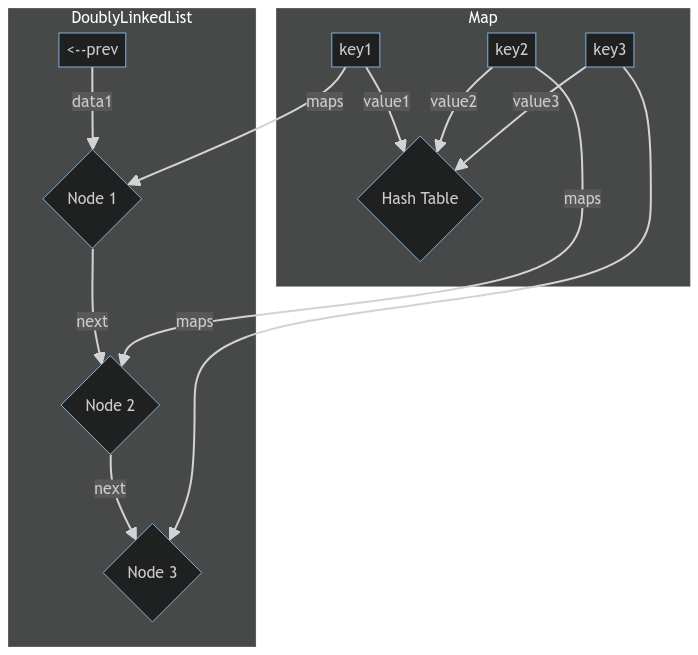

Key Data Structures

Map (Hash Table):

Stores key-value pairs for efficient retrieval.

Provides fast lookups by key, typically in O(1) time.

Maps keys to corresponding nodes in the doubly linked list.

Doubly Linked List:

Maintains the order of items based on their access time.

The head of the list represents the most recently used item, while the tail represents the least recently used.

Nodes are moved to the head of the list when accessed, ensuring the LRU eviction policy.

LRU Cache Visualisation

Implementation Steps

Define Data Structures:

node: Represents a key-value pair in the cache.

value: The value stored in the cache.

key: The key associated with the value.

prev: Pointer to the previous node in the doubly linked list.

nxt: Pointer to the next node in the doubly linked list.

package main

type node struct {

value int

key int

prev *node

nxt *node

}

cache: Represents the LRU cache itself.

hashMap: A map to store key-value pairs for efficient lookup.

capacity: The maximum number of items the cache can hold.

head: Pointer to the head of the doubly linked list (most recently used).

tail: Pointer to the tail of the doubly linked list (least recently used).

type cache struct {

hashMap map[int]*node

capacity int

head *node

tail *node

}

Implement a function to return a cache:

Create a new cache using the newLRUCache function:

Pass the desired capacity as argument size.

Initialize the hashMap, capacity, head, and tail fields.

func newLRUCache(size int) *cache { headNode := &node{ prev: nil, } tailNode := &node{ prev: headNode, nxt: nil, } headNode.nxt = tailNode return &cache{ capacity: size, hashMap: make(map[int]*node), head: headNode, tail: tailNode, } }Test for

newLRUCache, to be written inmain_test.go.package main import ( "testing" ) func TestNewLRUCache(t *testing.T) { // Test case 1: Create a new LRU cache with size 3 cache := newLRUCache(3) // Check if the cache initialized correctly if cache.capacity != 3 || cache.head == nil || cache.tail == nil || cache.head.nxt != cache.tail || cache.tail.prev != cache.head { t.Error("NewLRUCache initialization failed.") } // Test case 2: Create a new LRU cache with size 0 emptyCache := newLRUCache(0) // Check if the cache is initialized correctly for size 0 if emptyCache.capacity != 0 || emptyCache.head == nil || emptyCache.tail == nil || emptyCache.head.nxt != emptyCache.tail || emptyCache.tail.prev != emptyCache.head { t.Error("NewLRUCache initialization failed for size 0.") } }

Implement Core LRU Operations:

addNode(n node): Adds a node to the head of the doubly linked list.// add a new node to the head func (c *cache) addNode(n *node) { nxtNode := c.head.nxt prevNode := c.head prevNode.nxt = n nxtNode.prev = n n.nxt = nxtNode n.prev = prevNode }Test for

addNode(n node):func TestCacheAddNode(t *testing.T) { // Test case 1: Add a node to an empty cache cache := newLRUCache(3) newNode1 := &node{value: 101, key: 1} cache.addNode(newNode1) // Check if the node is correctly added to the head if cache.head.nxt != newNode1 || newNode1.prev != cache.head || newNode1.nxt != cache.tail { t.Error("AddNode failed for an empty cache.") } // Test case 2: Add a node to a non-empty cache newNode2 := &node{value: 102, key: 2} cache.addNode(newNode2) // Check if the new node is correctly added to the head and linked to the previous head if cache.head.nxt != newNode2 || newNode2.prev != cache.head || newNode2.nxt != newNode1 { t.Error("AddNode failed for a non-empty cache.") } // Test case 3: Add a node to a cache at full capacity newNode3 := &node{value: 103, key: 3} cache.addNode(newNode3) // Check if the new node is correctly added to the head, linked to the previous head, and the tail is updated if cache.head.nxt != newNode3 || newNode3.prev != cache.head || newNode3.nxt != newNode2 || newNode2.nxt != newNode1 || cache.tail.prev != newNode1 { t.Error("AddNode failed for a cache at full capacity.") } }removeNode(n node) bool: Removes a node from the doubly linked list.//Remove a node from the list func (c *cache) removeNode(n *node) bool { prev := n.prev next := n.nxt if prev == nil && next == nil { return false } prev.nxt = next next.prev = prev n.prev = nil n.nxt = nil return true }Test for

removeNode(n node) bool:func TestCacheRemoveNode(t *testing.T) { // Test case 1: Remove a node from a non-empty cache cache := newLRUCache(3) // Add nodes to the cache node1 := &node{value: 101, key: 1} node2 := &node{value: 102, key: 2} node3 := &node{value: 103, key: 3} cache.addNode(node1) cache.addNode(node2) cache.addNode(node3) // Remove node2 from the cache cache.removeNode(node2) // Check if node2 is correctly removed if node2.prev != nil || node2.nxt != nil || node1.prev != node3 || node3.nxt != node1 || cache.head.nxt != node3 { t.Error("RemoveNode failed for a non-empty cache.") } // Test case 2: Remove the node3 from the cache cache.removeNode(node3) // Check if node3 no longer exists in the cache, and the tail is updated if node3.prev != nil || node3.nxt != nil || cache.tail.prev != node1 || node1.nxt != cache.tail || cache.head.nxt != node1 { t.Error("RemoveNode failed for the last node in the cache.") } // Test case 3: Remove a node from a cache with only one node cache.removeNode(node1) // Check if node1 no longer exists in the cache, and the cache becomes empty if node1.prev != nil || node1.nxt != nil || cache.head.nxt != cache.tail || cache.tail.prev != cache.head { t.Error("RemoveNode failed for the last node in the cache.") } // Test case 4: Remove a node that is not in the cache notInCache := &node{value: 104, key: 4} cache.removeNode(notInCache) // Check if the cache remains unchanged after removing a node not in the cache if cache.head.nxt != cache.tail || cache.tail.prev != cache.head { t.Error("RemoveNode failed for a node not in the cache.") } }moveToHead(n node): Moves a node to the head of the list, marking it as recently used.//Move the node in the middle of the list to the head func (c *cache) moveToHead(n *node) { ok := c.removeNode(n) if ok { c.addNode(n) } }Test for

moveToHead(n node):func TestCacheMoveToHead(t *testing.T) { // Test case 1: Move a node to the head in a non-empty cache cache := newLRUCache(3) // Add nodes to the cache node1 := &node{value: 101, key: 1} node2 := &node{value: 102, key: 2} node3 := &node{value: 103, key: 3} cache.addNode(node1) cache.addNode(node2) cache.addNode(node3) // Move node2 to the head cache.moveToHead(node2) // Check if node2 is correctly moved to the head if cache.head.nxt != node2 || node2.prev != cache.head || node2.nxt != node3 || node3.prev != node2 { t.Error("MoveToHead failed for a non-empty cache.") } // Test case 2: Move the last node to the head cache.moveToHead(node1) // Check if node1 has been relocated to the head and the tail is updated if cache.head.nxt != node1 || node1.prev != cache.head || node1.nxt != node2 || cache.tail.prev != node3 { t.Error("MoveToHead failed for the last node in the cache.") } // Test case 3: Move a node in a cache with only one node cache.moveToHead(node3) // Check if node3 is correctly moved to the head, and the cache remains unchanged if cache.head.nxt != node3 || node3.prev != cache.head || node3.nxt != node1 || cache.tail.prev != node2 { t.Error("MoveToHead failed for a cache with only one node.") } // Test case 4: Move a node that is not in the cache notInCache := &node{value: 104, key: 4} cache.moveToHead(notInCache) // Check if the cache remains unchanged when trying to move a node not in the cache if cache.head.nxt != node3 || node3.prev != cache.head || node3.nxt != node1 || cache.tail.prev != node2 { t.Error("MoveToHead failed for a node not in the cache.") } }popTail() node: Removes and returns the least recently used node from the tail of the list.// pop tail func (c *cache) popTail() *node { res := c.tail.prev if res.prev == nil { return nil } c.removeNode(res) return res }Test for

popTail() node:func TestCachePopTail(t *testing.T) { // Test case 1: Pop the tail node in a non-empty cache cache := newLRUCache(3) // Add nodes to the cache node1 := &node{value: 101, key: 1} node2 := &node{value: 102, key: 2} node3 := &node{value: 103, key: 3} cache.addNode(node1) cache.addNode(node2) cache.addNode(node3) // Pop the tail node poppedNode := cache.popTail() // Check if the tail node is correctly popped and returned if poppedNode != node1 || cache.tail.prev != node2 || node2.nxt != cache.tail { t.Error("PopTail failed for a non-empty cache.") } // Test case 2: Pop the tail node in a cache with only one node cache = newLRUCache(1) soleNode := &node{value: 201, key: 1} cache.addNode(soleNode) // Pop the tail node poppedNode = cache.popTail() // Check if the sole node is correctly popped and returned, and the cache becomes empty if poppedNode != soleNode || cache.head.nxt != cache.tail || cache.tail.prev != cache.head { t.Error("PopTail failed for a cache with only one node.") } // Test case 3: Pop the tail node from an empty cache emptyCache := newLRUCache(0) // Pop the tail node poppedNode = emptyCache.popTail() // Check if nil is returned when popping from an empty cache if poppedNode != nil { t.Error("PopTail failed for an empty cache.") } }

Retrieve Values:

get(key int) node: Retrieves a value from the cache and checks if the key exists in the hashMap.If found, move the corresponding node to the head usingmoveToHead(n node)and return it. If not found, returns nil.func (c *cache) get(key int) *node { n, ok := c.hashMap[key] if ok { c.moveToHead(n) return n } return nil }Test for

get(key int) node:func TestCacheGet(t *testing.T) { // Test case 1: Get a node with an existing key in the cache cache := newLRUCache(3) // Add nodes to the cache node1 := &node{value: 101, key: 1} node2 := &node{value: 102, key: 2} node3 := &node{value: 103, key: 3} cache.addNode(node1) cache.hashMap[node1.key] = node1 cache.addNode(node2) cache.hashMap[node2.key] = node2 cache.addNode(node3) cache.hashMap[node3.key] = node3 // Get node with key 2 resultNode := cache.get(2) // Check if the correct node is returned and moved to the head if resultNode != node2 || cache.head.nxt != node2 || node2.prev != cache.head || node2.nxt != node3 { t.Error("Get failed for an existing key in the cache.") } // Test case 2: Get a node with a non-existing key in the cache nonExistingKey := 4 resultNode = cache.get(nonExistingKey) // Check if nil is returned for a non-existing key, and the cache remains unchanged if resultNode != nil || cache.head.nxt != node2 || node2.prev != cache.head || node2.nxt != node3 { t.Error("Get failed for a non-existing key in the cache.") } }

Add or Update Values:

put(key, value int): Adds or updates a key-value pair in the cache and checks if the key exists in the hashMap. If found, update the value and move the node to the head. If not found, create a new node and add it to the head. If the cache is full, evict the least recently used node (using popTail) to make space.func (c *cache) put(key, value int) { n, ok := c.hashMap[key] if ok { c.removeNode(n) } newNode := &node{ value: value, key: key, } c.addNode(newNode) c.hashMap[key] = newNode if len(c.hashMap) > c.capacity { nodeToRemove := c.popTail() delete(c.hashMap, nodeToRemove.key) } }Test for

put(key, value int):func TestCachePut(t *testing.T) { // Test case 1: Put a new node into the cache cache := newLRUCache(3) // Put a new node with key 1 and value 101 cache.put(1, 101) // Check if the node is correctly added to the cache and hashMap if len(cache.hashMap) != 1 || cache.head.nxt.key != 1 || cache.head.nxt.value != 101 || cache.tail.prev.key != 1 || cache.tail.prev.value != 101 { t.Error("Put failed for adding a new node.") } // Test case 2: Put a node with an existing key into the cache cache.put(1, 102) // Check if the existing node is correctly updated and moved to the head if len(cache.hashMap) != 1 || cache.head.nxt.key != 1 || cache.head.nxt.value != 102 || cache.tail.prev.key != 1 || cache.tail.prev.value != 102 { t.Error("Put failed for updating an existing node.") } // Test case 3: Put nodes into the cache until reaching capacity, causing eviction cache.put(2, 202) cache.put(3, 303) cache.put(4, 404) // Check if the eviction occurred and the cache is at its capacity if len(cache.hashMap) != 3 || cache.head.nxt.key != 4 || cache.head.nxt.value != 404 || cache.tail.prev.key != 2 || cache.tail.prev.value != 202 { t.Error("Put failed for reaching cache capacity and eviction.") } }

Usage Example:

package main func main() { cache := newLRUCache(2) // Create a cache with capacity 2 cache.put(1, 10) cache.put(2, 20) val := cache.get(1) // Returns 10, moves node 1 to the head cache.put(3, 30) // Evicts node 2 (least recently used) }

Conclusion

This article explored the key concepts and implementation details of LRU (Least Recently Used) caches in Golang. Here are the key takeaways:

Caching Principle: LRU caches store frequently accessed data in memory to reduce expensive retrieval costs from slower sources. They prioritize recently used items, evicting the least recently used when reaching capacity.

Data Structures: LRU caches typically use a combination of a map for fast key-value lookup and a doubly linked list to maintain the access order.

Implementation Steps: The article outlined the core functions of an LRU cache in Golang, including adding, retrieving, updating, and removing key-value pairs while managing eviction and maintaining the linked list order.

By understanding the principles and benefits of LRU caching and exploring advanced options and practical applications, Golang developers can leverage this powerful technique to enhance performance, optimize resource utilization, and build more efficient and responsive applications.